Abstract

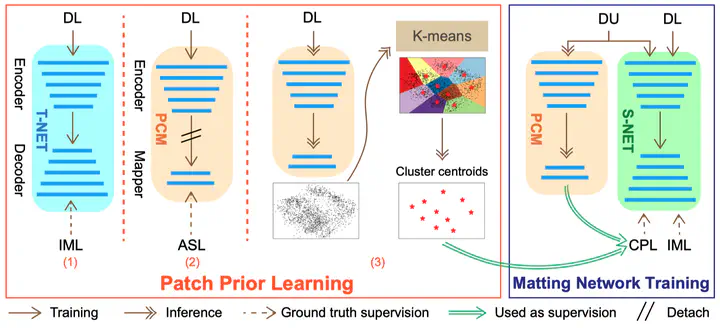

Image matting aims to extract an accurate foreground opacity mask from a given image. State-of-the-art approaches are usually based on encoder-decoder neural networks and require a large dataset with ground-truth alpha mattes for training. However, annotating alpha mattes is extremely time-consuming and labor-intensive. To ease this burden, we propose a novel deep learning-based weakly supervised image matting method that can simultaneously use data with and without ground-truth alpha mattes to boost matting performance. The key idea is to exploit the patch-wise similarity of alpha mattes without explicitly relying on ground-truth alpha mattes. To this end, we design a novel patch clustering module that clusters patches with similar alpha mattes, and we propose a new loss function that uses this clustering prior to supervise the matting network. Experimental results show that our method effectively clusters image patches by the similarity of their corresponding alpha patches and improves matting performance. To our knowledge, our method is the first to tackle the weakly supervised image matting problem with only trimaps as the annotation.